Note to Abby: I did get distracted. Lemon juice next week.

In Friday’s post, I offered Jaminet’s Corollary to the Ewald Hypothesis. The Ewald hypothesis states that since the human body would have evolved to be disease-free in its natural state, most disease must be caused by infections. A consequence of the Ewald hypothesis is that, since microbes evolve very quickly, they will optimize their characteristics, including their virulence, depending on the human environment. If human-human transmission is easy, microbes will become more virulent and produce acute, potentially fatal disease. If transmission is hard, microbes will become less virulent, and will produce mild, chronic diseases.

Jaminet’s corollary is that such an evolution has been happening over the last hundred years or so, caused by water and sewage treatment and other hygienic steps that made transmission more difficult. The result has been a decreasing number of pathogens that induce acute deadly disease, but an increasing number that induce milder, chronic, disabling disease.

Indeed, most of the diseases we now associate with aging – including cardiovascular disease, cancer, autoimmune diseases, dementia, and the rest – are probably of infectious origin and the pathogens responsible may have evolved key characteristics fairly recently. Many modern diseases were probably non-existent in the Paleolithic and may have substantially changed character in just the last hundred years.

I predict that pathogens will continue to evolve into more successful symbiotes with human hosts, and that chronic infections will have to become the focus of medicine.

Is there evidence for Jaminet’s corollary? I thought I’d spend a blog post looking at gross statistics.

When did hygienic improvements occur?

Since the evolution of pathogens should have begun when water and sewage treatment were adopted, it would be good to know when that occurred.

Historical Statistics of the United States, Millennial Edition, volume 4, p 1070, summarizes the history as follows:

[I]n the nineteenth century most cities – including those with highly developed water systems – relied on privy vaults and cesspools for sewage disposal…. Sewers were late to develop because at least initially privy vaults and cesspools were acceptable methods of liquid waste disposal, and they were considerably less expensive to build and operate than sewers.

Sewers began to replace privy vaults and cesspools as running water became more common and its use grew. The convenience and low price of running water led to a great increase in per capita usage. The consequent increase in the volume of waste water overwhelmed and undermined the efficacy of cesspools and privy vaults. According to Martin Melosi, “the great volume of water used in homes, businesses, and industrial plants flooded cesspools and privy vaults, inundated yards and lots, and posed not just a nuisance but a major health hazard” (Melosi 2000, p 91).

Joel Tarr also notes the impact of the increasing popularity of water closets over the later part of the nineteenth century (Tarr 1996, p 183). Water closets further increased the consumption of water, thus contributing to the discharge of contaminated fluids.

The data is not really adequate to tell when the biggest improvements were made. The most relevant data series, Dc374 and Dc375, begin only in 1915. They show that investments in sewer and water facilities were high before World War I, fell during the war and post-war depression, were very high again in the 1920s, and fell again after the Great Depression. It’s likely that the peak in water and sewage improvements occurred before 1930. In constant dollar terms, investment in water facilities peaked in 1930 at 610 million 1957 dollars and didn’t reach that level again until 1955. Investment in sewer facilities peaked at 734 million 1957 dollars in 1936 – probably due to Depression-era public works spending – and didn’t reach those levels again until 1953.

It seems likely that hygienic improvements were being undertaken continuously from the late 1800s and were probably completed in most of the US by the 1930s; in rural areas by the 1960s. Systems to deliver tap water were built mostly in the last quarter of the 19th century and first half of the 20th. The first flush toilets appeared in 1857-1860 and Thomas Crapper’s popularized toilet was marketed in the 1880s.

Mortality

Historical Statistics of the United States, Millennial Edition, volume 1, p 385-6, summarizes the trends in mortality as follows:

Recent work with the genealogical data has concluded that adult mortality was relatively stable after about 1800 and then rose in the 1840s and 1850s before commencing long and slow improvement after the Civil War. This finding is surprising because we have evidence of rising real income per capita and of significant economic growth during the 1840-1860 period. However, … urbanization and immigration may have had more deleterious effects than hitherto believed. Further, the disease environment may have shifted in an unfavorable direction (Fogel 1986; Pope 1992; Haines, Craig and Weiss 2003).

Of course, urbanization and a worsening of the disease environment would be expected to coincide: with lack of hygienic handling of sewage, cities were mortality sinks throughout medieval times and that would have continued into the 19th century. Under the Ewald hypothesis, we would expect microbes to have become more virulent as cities became more densely populated in the 1840s and 1850s.

We have better information for the post-Civil War period. Rural mortality probably began its decline in the 1870s because of improvements in diet, nutrition, housing, and other quality-of-life aspects on the farm. There would have been little role for public health systems before the twentieth century in rural areas. Urban mortality probably did not begin to decline prior to 1880, but thereafter urban public health measures – especially construction of central water distribution systems to deliver pure water and sanitary sewers – were important in producing a rapid decline of infectious diseases and mortality in the cities that installed these improvements (Melosi 2000). There is no doubt that mortality declined dramatically in both rural and urban areas after about 1900 (Preston and Haines 1991).

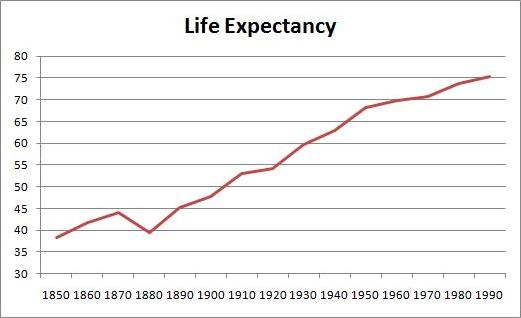

The greatest improvements in mortality occurred between 1880 and 1950. Here is life expectancy at birth between 1850 and 1995 (series Ab644):

Life expectancy was only 39.4 years in 1880, but increased to 68.2 years by 1950 – an increase of 28.8 years. In the subsequent 40 years, life expectancy went up only a further 7.2 years.
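To make the slowdown concrete, here is a minimal sketch that turns the figures quoted above into an average gain per decade. It uses only the numbers stated in this post; the variable and function names are illustrative, and it takes the 40-year span at face value rather than reading it from the underlying series.

```python
# Figures quoted in the post for series Ab644 (life expectancy at birth, years).
LIFE_EXPECTANCY_1880 = 39.4
LIFE_EXPECTANCY_1950 = 68.2
GAIN_AFTER_1950 = 7.2    # "a further 7.2 years"
YEARS_AFTER_1950 = 40    # "the subsequent 40 years" (taken as stated)


def gain_per_decade(total_gain_years: float, span_years: int) -> float:
    """Average life-expectancy gain, in years per decade, over a span."""
    return 10 * total_gain_years / span_years


early_gain = LIFE_EXPECTANCY_1950 - LIFE_EXPECTANCY_1880
early_rate = gain_per_decade(early_gain, 1950 - 1880)
late_rate = gain_per_decade(GAIN_AFTER_1950, YEARS_AFTER_1950)

print(f"1880-1950: +{early_gain:.1f} years, {early_rate:.1f} per decade")
print(f"After 1950: +{GAIN_AFTER_1950:.1f} years, {late_rate:.1f} per decade")
```

On these numbers, life expectancy rose by roughly 4.1 years per decade between 1880 and 1950, against about 1.8 years per decade afterward.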

Causes of Death

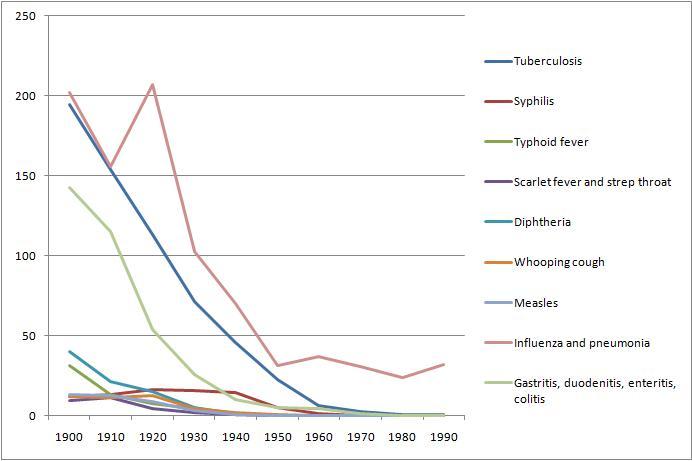

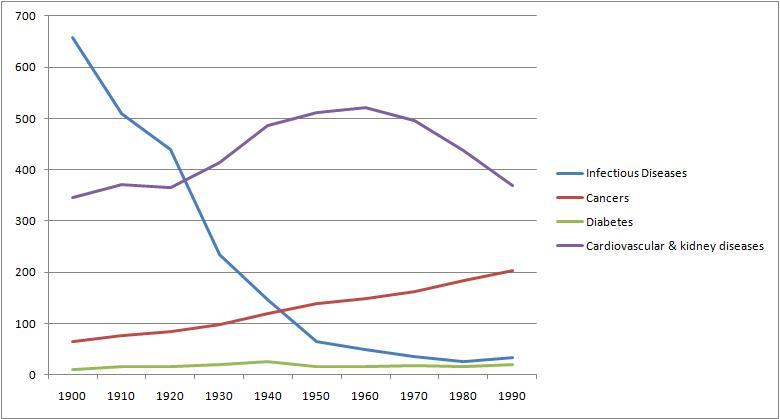

From Table Ab929-951 of volume 1, we can get a breakdown of death rates by cause from 1900 to 1990. Here are death rates from various infectious diseases:

And here for comparison are death rates from cancer, cardiovascular and renal diseases, and diabetes:

Overall, death rates have declined, consistent with rising life expectancy. However, death rates from chronic diseases have actually increased, while death rates from acute infections have, save for influenza and pneumonia, gone pretty much to zero.

Conclusion

Death rates from acute infections plummeted in the period 1880 to 1950 when hygienic improvements were being made. By and large, these decreases in infectious disease mortality preceded the development of antimicrobial medicines. Penicillin was discovered only in 1928, and by then mortality from infectious diseases had already fallen by about 70%.

We can’t really evaluate the Jaminet corollary from this data, other than to say that the data is consistent with the hypothesis. Nothing here rules out the idea that pathogens have been evolving from virulent, mortality-inducing germs into mild, illness-inducing germs.

Sometime later this year, I’ll look for evidence that individual pathogens have evolved over the last hundred years. It should be possible to find evidence regarding the germs for tuberculosis and influenza, since those continue to be actively studied.

There is great concern over the evolution of antibiotic resistance among bacteria. This data suggests that antibiotic resistance will not generate a return to the high mortality rates of the 19th century. Those mortality rates were high not due to a lack of antibiotics, but due to a lack of hygiene that encouraged microbes to become virulent.

As long as we keep our hands and food clean and our running water pure, we can expect mortality rates to stay low. Our problem will be a growing collection of chronic diseases.

Our microbes will want to keep us alive — that is good. But they will increasingly succeed at making us serve them as unwilling hosts. We will be increasingly burdened by parasites.

Diet, nutrition, and antimicrobial medicine are our defenses. Let’s use them.

Hi Pauls,

Sounds logical. I wonder about the CVD death rates. It looks like there is a big peak in the 1960s, and then the rate goes down almost to the level of 1900.

Are these the effects of modern health care/emergency medicine, distorting the picture of “ever increasing CVD”? I tend to think so. One is much more likely to survive a heart attack nowadays than in 1900. But can we pin a number or % to it, to straighten out the curve so to speak and show the real increase in CVD over the last century?

Sorry for the plural, Paul!

Paul:

Seems plausible to me, as I am a long-term fan of the sanitation-infectious diseases negative correlation (read: “antivaxxer”). That is, I shrug at the thought that the big pharma actually adopts the theory and starts massive production. Which is far more likely than future focusing on “Diet, nutrition, and antimicrobial medicine (=medicines that actually fight existing illnesses in case diet and nutrition fail – not vaccines)”

Hi Franco,

Yes, I think that’s medical science at work. At great expense, they figured out how to let people merely be crippled by heart disease instead of dying from it. But mortality rates are still at 1900 levels, and incidence rates are much higher.

I don’t have good data on the incidence issue but I’ll keep an eye out for it. I need to find data on incidence of non-fatal diseases like autoimmune disorders.

Hi Tomas,

I think medicine and pharma will move this way … but there may need to be a big regulatory overhaul to make it more profitable to do truly effective drug development. Right now they make most of their money from treatments that never cure. Management of chronic disease creates recurring revenue, cures are profitless, so antimicrobial drugs don’t get developed.

Hi Paul – just wanted to add that in the industrial cities nutritional deficiencies were rampant (starting in England and moving over to the US several decades later, as near as I can tell from the somewhat Anglocentric research studies), especially as refined flour became more available. It was common to wean babies to bread and water (bread and milk if you were lucky). Combine soot, all-day indoor working conditions for everyone including children… you’ve got a myriad of deficiencies and weakness to viruses given lack of vitamin D…

Thanks, Emily. Yes, there was a rickets epidemic at the turn of the century, all kinds of problems. I complain about the USDA food guidelines but wealth and a more efficient food delivery system have really improved diets.

Unfortunately the IQ data shows the improvement in diet has topped out and may have reversed …

Paul,

But what about our good bacteria? If we are going to move into more chronic infection treatment, I think it is vital to have a reliable procedure that can fully restore optimal flora within a short time after antimicrobial therapy ceases. Otherwise, we are just open to more chronic infections.

Likewise, antimicrobial medicine will have to constantly change to avoid resistance… how many times can it change before something like absolute resistance happens? Can that happen?

Hi Bill,

Yes, broad-spectrum antibiotics are very far from an ideal antimicrobial therapy. They don’t even touch viruses which are very common.

This is the challenge. But molecular medicine opens the possibility of tightly targeted antimicrobials that attack specific species.

Also, therapies can cooperate with the body’s immune response. The immune system naturally permits gut flora while vigorously attacking systemic pathogens.

So I think the future will have therapies that avoid that difficulty.

Re antimicrobial resistance, that is a big problem in the gut because there are so many species and gene exchange is so common. Inside the body, inside cells, there are few pathogens and few or no opportunities for gene exchange. So systemic pathogens tend not to develop antimicrobial resistance easily.

I am not saying that given the current state of medicine, therapy for chronic diseases is obvious. In fact I believe effective therapy needs to begin with diet and nutrition — thus our book!

Best, Paul

I don’t know about this.

Too much correlation.

And the data isn’t great. In 1900 it is easy to say when someone dies of infectious disease. Hard to say when someone dies from cancer, heart attack, etc.

Possibly infectious diseases, by killing weaker people off in childhood, now allow people with weaker systems to survive and breed. Asthma, for instance.

Tying that into general autoimmune disorders might make more sense.

But way off topic. Given how badly Americans live and eat right now (bad diet, no exercise, and bad macronutrients), all this seems way too far off. Focus on the low-hanging fruit: it is easy and cheap to change your diet.

Paul,

An interesting hypothesis (Ewald) and corollary (Jaminet). I can certainly see how water-borne bacterial illnesses would evolve to become more chronic as their routes from one host to the next were greatly reduced, forcing them to “wait longer” to infect the next host. It’s much more difficult to see how air-borne pathogens, or even those spread by physical contact, would have any pressure to become more chronic, especially since the trend is for more and more people to live in crowded urban areas with a high density of human contacts as opposed to rural areas with a low density. Perhaps bacterial infections would have such pressure because severe cases would immediately be treated with antibiotics, favoring the strains that caused less severe cases that wouldn’t rise to the level of treatment initially; so maybe, since the advent of antibiotics, they have become more chronic. However, even in that case, it would seem to make as much sense for the bacterium to evolve toward greater infectivity as toward a chronic form, because then it could infect the next host before being controlled by the antibiotics. And for viral infections, for which no good therapies exist even now (except in the case of HIV), I can see no good reason for the evolution toward chronic forms.

Also, I’m wondering how this would work for something like Lyme disease, which is picked up by a human from an animal (a tick), and where a human seems to be a dead-end host (I know that many in the Lyme community disagree but there is virtually no good evidence for human-to-human spread). Lyme and other tick-borne illnesses are blamed for all sorts of chronic problems, but I can see no reason at all for them to evolve in this direction–in fact, given the explosion in the deer population due to restrictions on hunting in areas near urban centers, the pressure would seem to be the other way, toward an acute and fairly ravaging illness, where the Lyme bacteria multiply like crazy and disable or kill their host relatively rapidly, because by the time it is immobilized or dies they have already moved on to the next, readily available host. The same holds for other tick-borne illnesses such as Babesia, Bartonella, et al. Humans who have the bad luck to pick these up should get very sick very quickly, not have a chronic, smoldering disease–there’s nothing in it for the bacteria to evolve that way, given that the human is not even the “intended” host.

I’m not nitpicking, I’m really trying to understand how everything fits into the picture, and I have a personal stake in it–I have tested positive for Lyme antibodies as well as for an antibody pattern that indicates “chronic recurrent” Epstein Barr virus infection, not to mention high titers for C. pneumoniae. While my number one hypothesis at this point is that my fatigue, joint pain, headaches, and other symptoms too numerous to list, are caused in large part by chronic infections, I really haven’t heard any truly compelling arguments for these particular illnesses to have evolved toward chronic forms (with the possible exception of the C. pneumoniae). Of course, I’m perfectly willing to be convinced by having a course of antibiotics successfully eliminate or at least noticeably reduce my symptoms, but so far no luck.

But the discussion and evidence are both in their infancy, and I look forward to hearing what you and others think as it continues to be explored.

Hi Eric,

Great observations!

I’m sorry to hear of your illness. You really got an unfortunate set of pathogens. The C pn – EBV combination puts you at high risk for MS, and Lyme can be quite a disaster.

I think re Lyme, since humans are a dead-end host, the primary adaptations should be to their primary hosts. So their virulence in humans may be reduced due to maladaptation. But it’s not obvious that Lyme has gotten less virulent – if anything its recent emergence as a human disease suggests it has grown more virulent. That would fit with your idea that not culling deer would promote its virulence.

But if they evolved for chronic disease in deer, then they should be even less virulent in humans.

Re airborne pathogens over the last century, the decline of smoking may have significantly affected the virulence of airborne pathogens. Smoking-injured lungs develop infections more easily. With fewer smokers, transmission is effectively harder.

C. pneumoniae has evolved two forms, one that enters primarily via the lungs and the other primarily via the gut. They tend to infect different organs internally. It is a pathogen that has definitely become adapted to humans specifically. It would be interesting to get detailed genetic population studies and try to reconstruct its evolutionary history. C. pneumoniae was originally a zoonotic pathogen but it has now acquired many human-specific adaptations.

In the US “urbanization” has largely consisted of suburban growth rather than higher densities in the urban core. Probably this evolution is toward greater hygiene, despite higher population numbers.

This is all brainstorming, but fun.

Have you tried antibiotics? Combination protocols are usually most effective.

Best, Paul

I’ve been on a number of antibiotics as well as antivirals for several months now, with little or no noticeable improvement. I don’t want to stop until I have really given them a good long try, which my doctor tells me is at least six months.

One of the reasons I don’t want to put all my eggs in the infection basket is that I do have other things going on that might be contributing to, or even the major cause of, my symptoms, including sleep apnea and food allergies (I posted earlier in the Experiences Good and Bad on PHD post if this sounds familiar). I just got a dental appliance to help with the sleep apnea (after complete failure of CPAP, even though it controlled the apnea I was still exhausted, quite possibly because the CPAP itself wakes you up as much or more than the apnea) and found that it did seem to help somewhat, but caused jaw pain. After a few nights without it I seem back to square one in terms of fatigue. The allergies are so numerous that I really don’t know what to do about them in any practical manner.

So you can see, a lot going on. I have a feeling the same is true for a lot of people who get a diagnosis of, e.g., Lyme, and go down the road of Lyme treatment but don’t consider other possible causes of their problems. If the treatment works, great, but if it doesn’t you’re left somewhat at sea. Fortunately, my Lyme doc is also very aware of the other possibilities and, while she, too, thinks the best guess at this point is still Lyme and other infections, we’re both open to exploring other possibilities.

Hi Eric,

Food allergies indicate a ravaged gut; if the gut is leaky then almost any protein can become an allergen.

Unfortunately it’s tricky bootstrapping yourself out of this situation. Antibiotics are needed for the systemic infections, but often make the gut problems worse.

Sounds like you have a good doc. That’s important!

Best, Paul

Yes, lots going on and a tricky business, as you say. I’m sticking with the antibiotics for now, but if they are not working in any significant way after six months, I’m inclined to experiment with fasting, either just water fasting or coconut oil-supplemented; I’d be inclined to go first for a fairly long time, about ten days to two weeks, to sort of jumpstart the autophagy process (and whatever other processes that occur with fasting) then move to IF. In part my inclination toward fasting comes from the multitude of anecdotal reports, including some on your website, of fairly dramatic health improvements. I suspect that there is a lot more than autophagy going on, and I’m pretty disappointed that I can’t find many scientific discussions of the effects of fasting on health problems, at least not general discussions (I know there are specific discussions around specific conditions, such as rheumatoid arthritis).

The hygiene hypothesis claims that some exposure to friendly germs is necessary for the development of the immune system. We have coevolved with some germs and modern paranoia about kids playing with soil, pets and other kids may contribute to dysfunctional immune systems. Furthermore with a varied microbial environment bugs may keep one another in check.

Remember, our ancestors consumed a variety of fruit, vegetables and teas. Plants are a rich source of natural antibiotics that, unlike pharmaceuticals, evolve around drug resistance.

Speaking of infections, has anyone seen the documentary, “Babies”? It is a true eye-opener when you see the African baby sucking on a bone he finds in the dirt, while the American baby is given a nice clean banana at a nice clean table. The Mongolian baby is sitting in a bath in a basin that is also used to wash out the offal from a just-slaughtered animal, when another animal comes and takes a drink from the baby’s bathwater. The American baby, meanwhile, is sitting in her mom’s arms in a white porcelain bathtub filled with clean, warm water. Presumably, all the kids grow up adapted to the bugs and viruses that surround them, and with immune systems that are adapted to protect them from whatever diseases those microbes cause. In terms of immunity, my guess is that the Mongolian and African kids (if they survive to adulthood) would have more protection against infections than the American kid or the Japanese kid, who also grows up in a relatively antiseptic environment. Anyways, it was a good movie. I recommend.

Hi Maggy!

I didn’t see this exact documentary, but the link between a “too clean” environment and autoimmune disorders, particularly allergic rhinitis (hay fever) and neurodermatitis, is a recurring theme on German TV. It looks like kids growing up in the countryside or directly on farms have a much lower risk of developing those (also, East Germany, when still separated, was supposedly a less clean environment than the West, and it had only half as many autoimmune disorders).

Now, Germans in general are still among the tidiest and cleanest people in the world, and I blame the TV commercials for further reinforcing it with ever more effective antibacterial, antiseptic household cleaners, washing powders and whatever.

Thus MDs almost unanimously say: let your kids play more in the dirt and mud! If your baby’s pacifier falls on the floor, don’t fret, just put it back!

There’s even a group of scientists testing some type of tapeworm on people to cure allergic rhinitis.

I think the logic behind it is to let the immune system work on a real enemy so that it forgets to attack itself (or harmless substances).

Reportedly they have a good success rate.

Anyways, that’s quite the antithesis to what Paul posted here, or is it not?

Franco,

I think it is not contradictory. You only need to differentiate between sewage containing human-produced waste (bad: imagine an 18th century town where sewer = street) and mud not containing feces (good). In the latter “setting”, children have higher chances of developing powerful and natural defense mechanisms that do not backfire.

re “too clean”: I’d say this analogy does not fit. Kids growing up in cities face higher volumes of pollution, which I understand as “more dirty”. Yet it is correct that farm kids are more likely to play in and with dirt. So the environment is just different, and farm kids beat city kids 2-0 in health-promoting factors! 😀

Paul, you mention that “if the gut is leaky then almost any protein can become an allergen.”

Why does it seem that people who completely cut out gluten (and have clean diets) have such a large problem with it when they start to eat it again? One would think that they would have healed their gut and have a greater tolerance for the gluten at that point (but from the side effects I’ve heard of with reintroduction, that seems to just not be true).

There seems to be a period of adapting to food and I feel that even though you should rotate your food a little, our bodies do seem to like similar food a lot of the time (If the body senses it is a good fuel).

Hi Aaron,

It’s a good question. I see basically two possibilities.

(1) Wheat induces leaky gut and an influx of gut endotoxins. The body has evolved to set the influx of endotoxin to a certain level, http://perfecthealthdiet.com/?p=1765. When you eat wheat every day, the immune system sees endotoxins every day and exerts strong control over gut bacterial populations to keep endotoxins down.

When you stop eating wheat, the gut lining tightens and lets fewer endotoxins in. If your no-wheat diet is also low-carb, bacteria have less to eat and endotoxin influx falls even more. The immune system relaxes to let gut bacteria multiply, until endotoxin influx returns to the evolutionary set point.

Now when you eat wheat with this much larger gut flora population, you get a sudden leakiness of the gut. Meanwhile your much larger gut bacteria population goes to town on wheat carbs. There is a huge influx of endotoxins. You feel lousy.

It takes a week or more for the immune system to activate and radically pare down the gut bacterial population.

(2) When you eat wheat every day, you develop a gut flora that is good at digesting wheat, including toxic wheat proteins. When you don’t eat wheat, you lose those species. Then when you suddenly add wheat, you get the full brunt of wheat toxins without any detoxification from wheat-adapted gut bacteria.

I think (1) is most likely.

Best, Paul

Paul-

Have you seen the new Stanford site on chronic infections and disease? It’s at http://chronicfatigue.stanford.edu/. The research fits very well with your hypotheses. As a sufferer of the horribly-named “Chronic Fatigue Syndrome”, I find the research studies on both C. pneumoniae and enteroviruses especially compelling.

–Anne

Hi Anne,

Thanks for letting me know about the Stanford project, that’s heartening to see!

Chronic illnesses are difficult. Mine turned out to be one of the easiest to cure, and it took me 17 years or so to do it.

We really need the doctors/scientists to come up with better detection/diagnosis methods and with better antimicrobials. So it’s great to see a top school devoting resources to the problem.

Best, Paul

I wonder if you can offer any specific recommendations for getting rid of cryptosporidium? I have just learned that I have a cryptosporidium infection (protozoal) and think that it may be the cause of long-term problems that have responded to no other treatment. (I have tried ALL the diet protocols). Any ideas of how to get rid of this infection?

Hi Siobhan,

Our basic diet should be good – with protozoa you don’t want to go too low-carb / ketogenic.

To learn how to get rid of it, talk to a doctor or infectious disease specialist. There are also resources online, here is the CDC’s take: http://www.cdc.gov/parasites/crypto/treatment.html.