Happy Mother’s Day!

Mother’s Day seems an auspicious time to begin a series on nutrition in pregnancy. It is an important topic, as I believe pregnant mothers are often alarmingly malnourished.

Triage Theory

“Triage theory,” put forward by Bruce Ames [1], is an obviously true but nevertheless important idea. It offers a helpful perspective for understanding the consequences of malnourishment during pregnancy.

Triage theory holds that we will have evolved mechanisms for devoting nutrients to their most fitness-improving uses. When nutrients are scarce, as in times of famine, available nutrients will be devoted to the most urgent functions – functions that promote immediate survival. Less urgent functions – ones which affect end-of-life health, for instance – will be neglected.

Ames and his collaborator Joyce McCann state their theory with, to my mind, an unduly narrow focus: “The triage theory proposes that modest deficiency of any vitamin or mineral (V/M) could increase age-related diseases.” [2]

McCann and Ames tested triage theory in two empirical papers, one looking at selenium [2] and the other at vitamin K [3]. They used a clever method. They used knockout mice – mice in which the genes encoding specific proteins were deleted from the genome – to classify vitamin K-dependent and selenium-dependent proteins as “essential” (if the knockout mouse died) or “nonessential” (if the knockout mouse was merely sickly). They then showed experimentally that when mice were deprived of vitamin K or selenium, the nonessential proteins were depleted more deeply than the essential proteins. For example:

- “On modest selenium (Se) deficiency, nonessential selenoprotein activities and concentrations are preferentially lost.” [2]

- The essential vitamin K dependent proteins are found in the liver and the non-essential ones elsewhere, and there is “preferential distribution of dietary vitamin K1 to the liver … when vitamin K1 is limiting.” [3]

They also point out that mutations that impair the “non-essential” vitamin K dependent proteins lead to bone fragility, arterial calcification, and increased cancer rates [3] – all “age-related diseases.” So it’s plausible that triage of vitamin K to the liver during deficiency conditions would lead in old age to higher rates of osteoporosis, cardiovascular disease, and cancer.

Generalizing Triage Theory

As formulated by Ames and McCann, triage theory is too narrow because:

- There are many nutrients that are not vitamins and minerals. Macronutrients, and a host of other biological compounds not classed as vitamins, must be obtained from food if health is to be optimal.

- There are many functional impairments which triage theory might predict would arise from nutrient deficiencies, yet are not age-related diseases.

I want to apply triage theory to any disorder (including, in this series, pregnancy-related disorders) and to all nutrients, not just vitamins and minerals.

Macronutrient Triage

Triage theory has already been applied frequently on our blog and in our book, though not by name. It works for macronutrients as well as it does for micronutrients.

Protein, for instance, is preferentially lost during fasting from a few locations – the liver, kidneys, and intestine. The liver loses up to 40 percent of its proteins in a matter of days on a protein-deficient diet. [4] [5] This preserves protein in the heart and muscle, which are needed for the urgent task of acquiring new food.

Protein loss can significantly impair the function of these organs and increase the risk of disease. Chris Masterjohn has noted that in rats given a low dose of aflatoxin daily, after six months all rats on a 20 percent protein diet were still alive, but half the rats on a 5 percent protein diet had died. [6] On the low-protein diet, rats lacked sufficient liver function to cope with the toxin.

Similarly, carbohydrates are triaged. On very low-carb diets, blood glucose levels are maintained so that neurons, which need a sufficient concentration gradient if they are to import glucose, may receive normal amounts of glucose. This has misled many writers in the low-carb community into thinking that the body cannot face a glucose deficiency; but the point of our “Zero-Carb Dangers” series was that glucose is subject to triage and, while blood glucose levels and brain utilization may not be diminished at all on a zero-carb diet, other glucose-dependent functions are radically suppressed. This is why it is common for low-carb dieters to experience dry eyes and dry mouth, or low T3 thyroid hormone levels.

One “zero-carb danger” which I haven’t blogged about, but have long expected to eventually be proven to occur, is a heightened risk of connective tissue injury. Carbohydrate is an essential ingredient of extracellular matrix and constitutes approximately 5% to 10% of tendons and ligaments. One might expect that tendon and ligament maintenance would be among the functions put off when carbohydrates are unavailable, as it takes months for these tissues to degrade. If carbohydrates were unavailable for a month or two, there would be little risk of connective tissue injury. Since carbohydrate deprivation was probably a transient phenomenon in our evolutionary environment, except in extreme environments like the Arctic, it would have been evolutionarily safe to deprive tendons and ligaments of glucose in order to conserve glucose for the brain.

Recently, Kobe Bryant suffered a ruptured Achilles tendon about six months after adopting a low-carb Paleo diet. It could be coincidence – or it could be that he wasn’t eating enough carbohydrate to meet his body’s needs, and carbohydrate triage inhibited tendon maintenance.

Triage Theory and Pregnancy-Related Disorders

I think triage theory may helpfully illuminate the effects of nutritional deficiencies during pregnancy. When a mother and her developing baby are subject to nutritional deficiencies, how does evolution partition scarce resources?

Nutritional deficiencies are extremely common during pregnancy. For example, anemia develops during 33.8% of all pregnancies in the United States, and 28% of women are still anemic after birth [source].

It’s likely that widespread nutritional deficiencies impair health to some degree in most pregnant women.

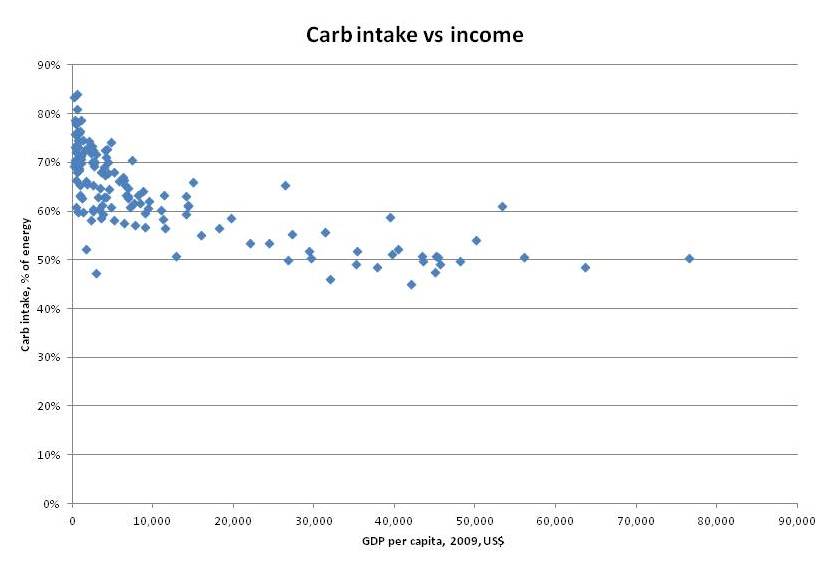

Those who have read our book know that we think malnutrition is a frequent cause of obesity and diabetes. Basically, we eat to obtain every needed nutrient; if the diet is unbalanced, then we may need to eat an excess of fatty acids and glucose before we have met our nutritional needs. This energy excess can, in the right circumstances, lead to obesity and diabetes.

But obesity and diabetes are common features of modern pregnancy. Statistics:

- 5.7% of pregnant American women develop gestational diabetes. [source]

- 48% of pregnant American women experience a weight gain during pregnancy of more than about 35 pounds. [source]

I take the high prevalence of these conditions as evidence that pregnant women are generally malnourished, and that the need for micronutrients stimulates appetite, causing women to gain weight and/or develop gestational diabetes.

Another common health problem of pregnancy is high blood pressure: 6.7% of pregnant American women develop high blood pressure [source]. This is another health condition which can be promoted by malnourishment.

It’s likely that nutritional deficiencies were also common during Paleolithic pregnancies. If so, there would have been strong selection for mechanisms to partition scarce nutrients to their most important uses in both developing baby and mother.

A Look Ahead

So:

- Nutritional deficiencies are widespread during modern pregnancies.

- They probably lead to measurable health impairments and weight gain in many pregnant women.

- The specific health impairments that arise in pregnant women or their babies are probably determined by which nutrients are most deficient, and by evolutionary triage which directs nutrients toward their most important functions and systematically starves other functions.

- Due to variations in how triage is programmed, deficiency of a nutrient during pregnancy may present with somewhat different symptoms than deficiency during another period of life.

This series will try to understand the effects of some common nutritional deficiencies of pregnancy. Triage theory may prove to be a useful tool for understanding those effects. Based on the incidence of possibly nutrition-related disorders like excessive weight gain, gestational diabetes, and hypertension, it looks like there may be room for significant improvements to diets during pregnancy.
